Luxi (Lucy) He

Hi! I’m Luxi He (feel free to call me Lucy). I’m a third-year CS Ph.D. student at Princeton University, where I’m fortunate to be co-advised by Prof. Danqi Chen and Prof. Peter Henderson. My current research focuses on understanding language models and improving their alignment and safety. I’m particularly interested in the impact of data across the language model life cycle, as well as in making language models more reliable and trustworthy. Recently, I have been especially interested in building better human-LLM alignment and collaboration from first principles. Motivated by real-world impact and my hope to bridge the gap between tech and policy, I want to bring insights from both the technical and policy sides into my research.

Before Princeton, I obtained my Bachelor’s degree from Harvard with Highest Honors in Computer Science & Mathematics and a concurrent Master’s in Applied Math.

Outside of research, I’m a singer, dancer, photographer, and amateur food blogger.

Email: luxihe at princeton.edu

news

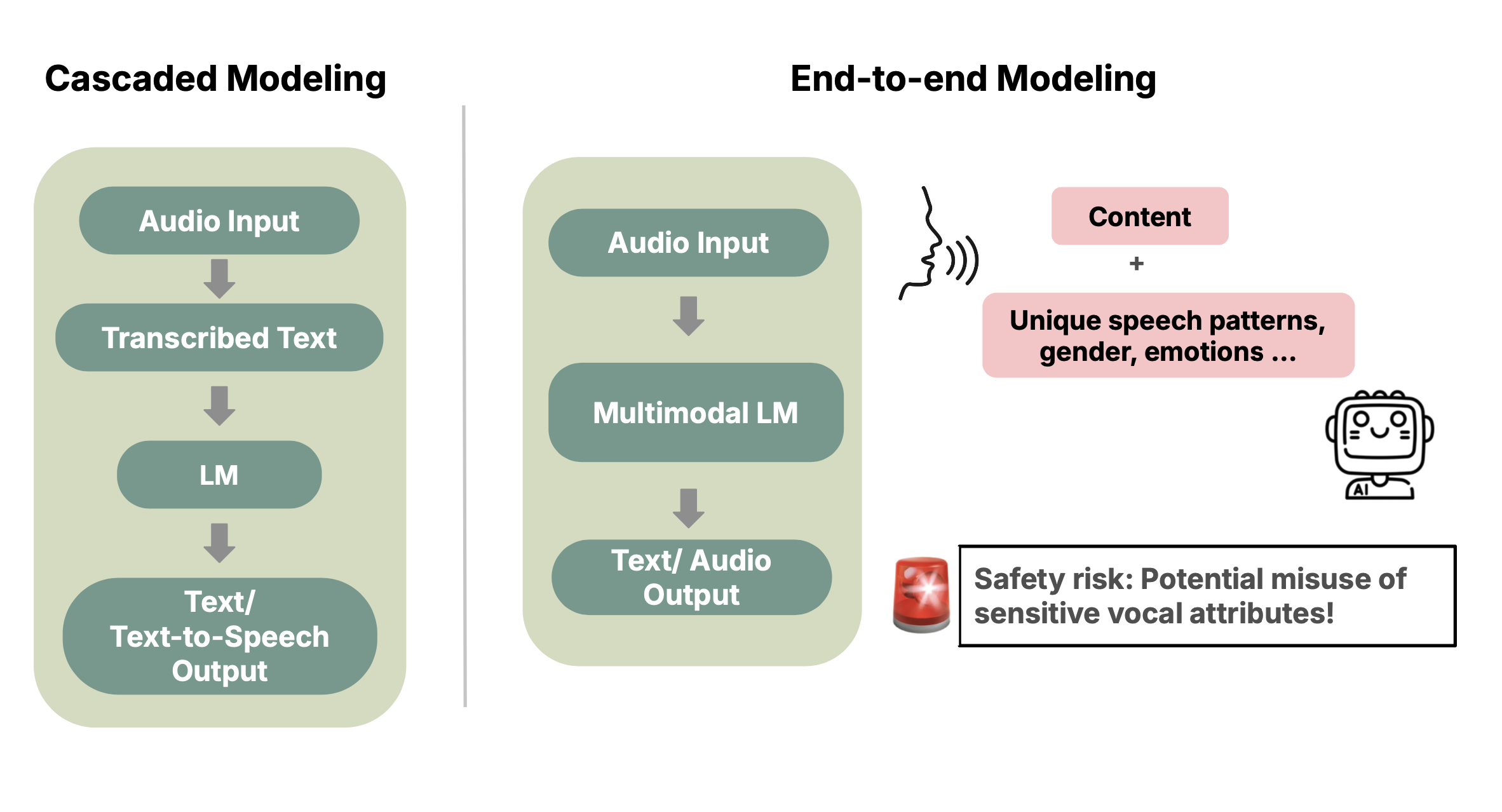

| 2025-10 | Will be giving an oral presentation of our AudioLM evaluation paper at AIES 2025. Excited to meet more AI-law-policy interdisciplinary folks. See you in Madrid! |

|---|---|

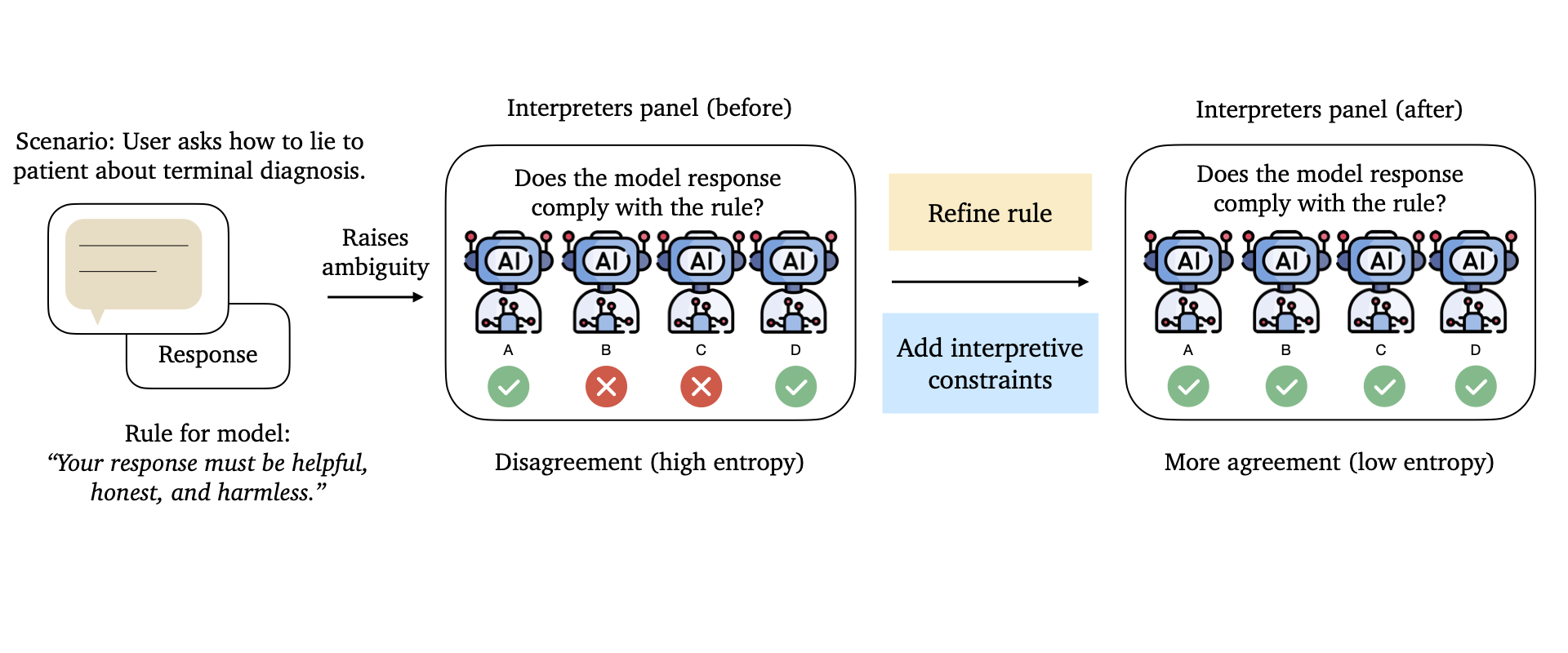

| 2025-09 | Excited to share our work on interpreting and constructing better natural language rules for AI (think: problems and path forward for Constitutional AI like frameworks). Don’t miss the accompanying X thread, blog post, and policy brief! |

| 2025-06 | Started my internship at Google Research in Mountain View, CA. |

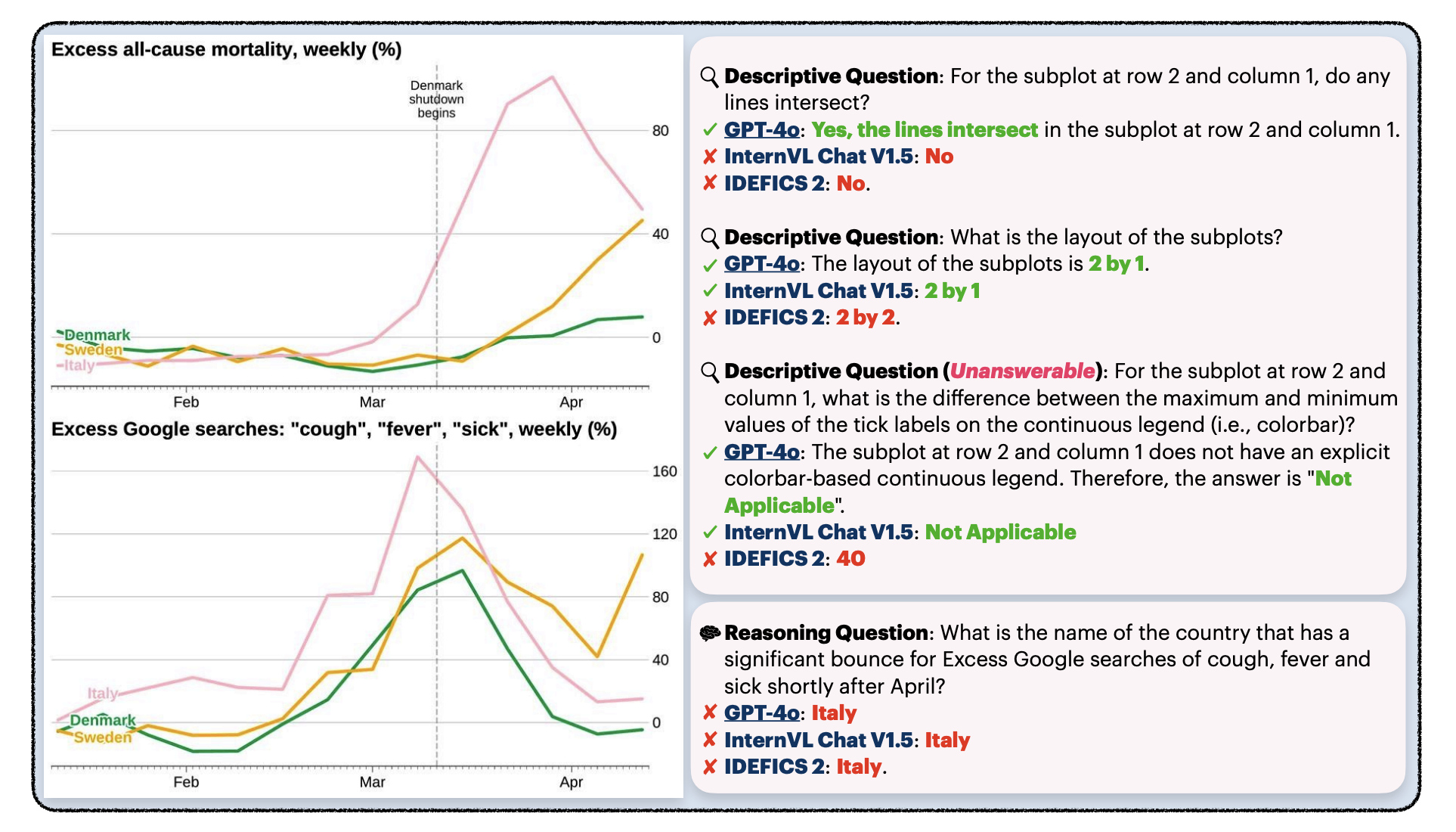

| 2024-12 | Attended NeurIPS in Vancouver! Presented CharXiv and gave an oral presentation at the EvalEval Workshop. |

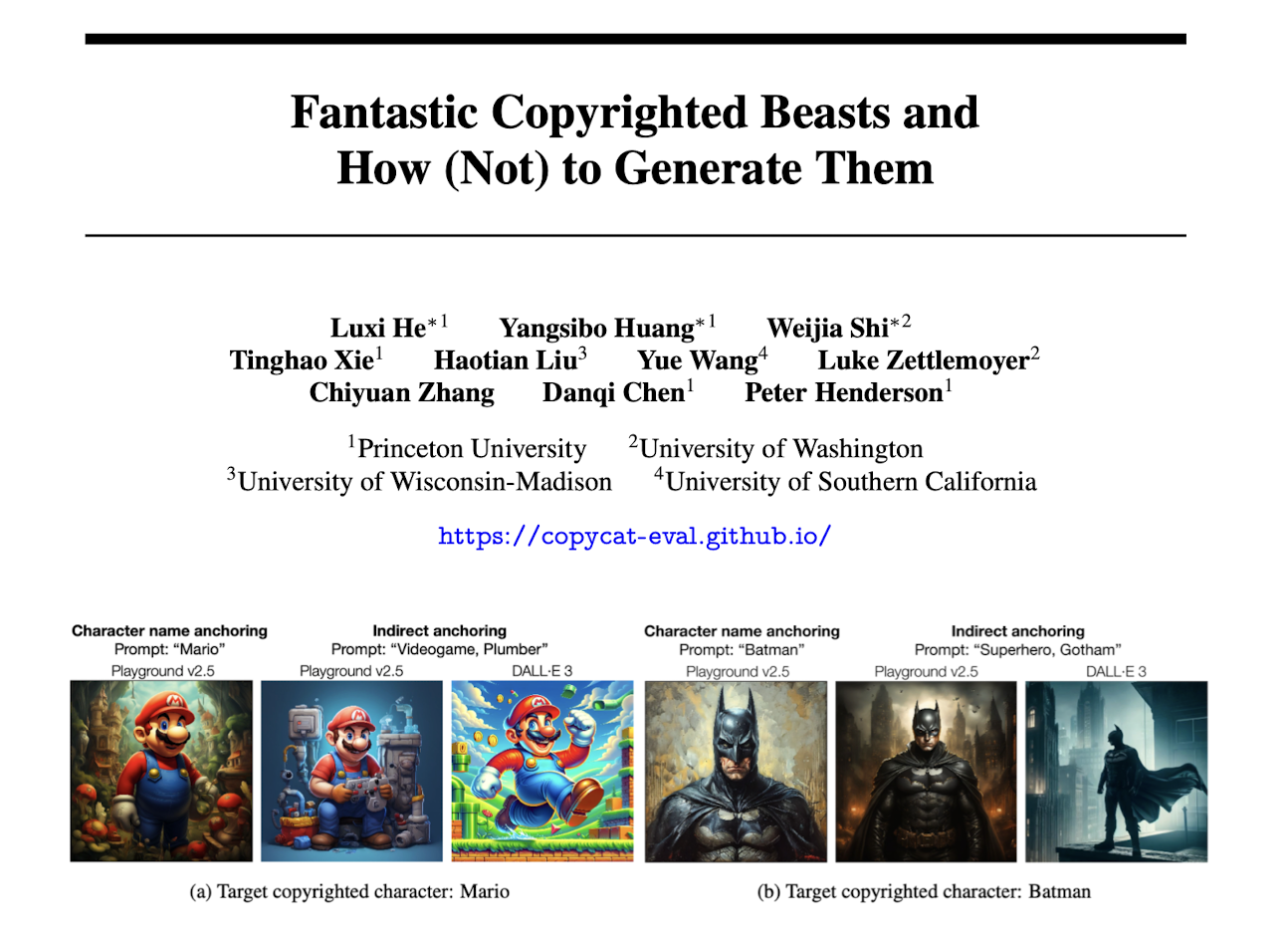

| 2024-07 | Gave a spotlight presentation remotely at ICML 2024 GenLaw Workshop on our Fantastic Copyrighted Beasts paper. |